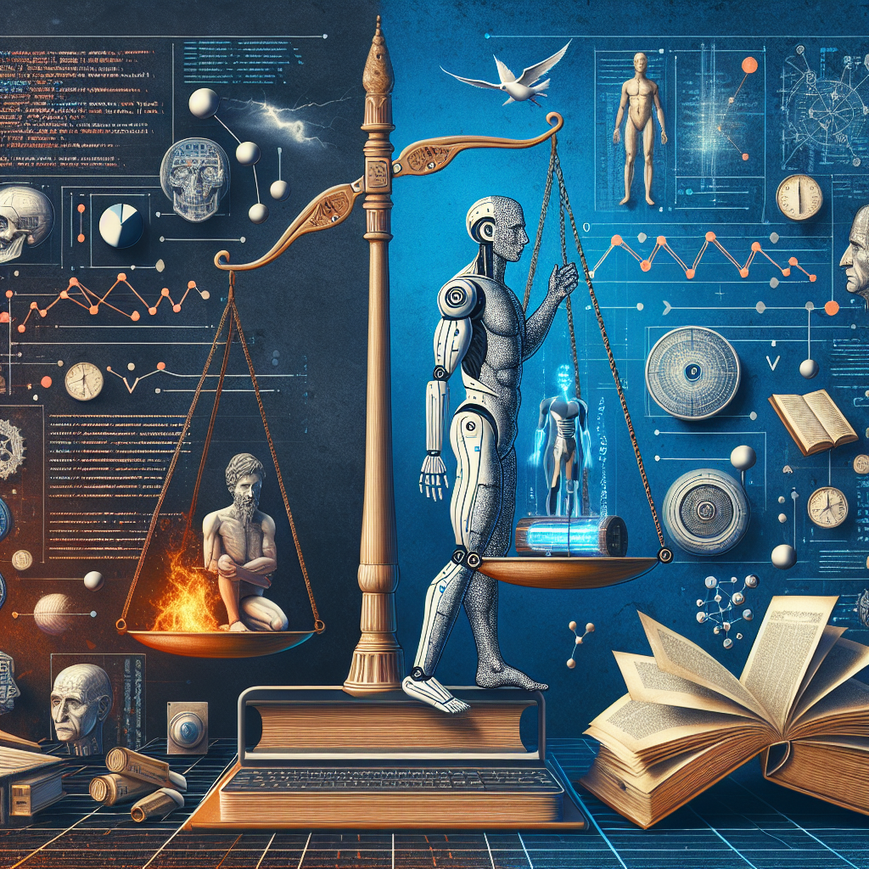

The AI Dilemma: Risks of Autonomy and Control

In a surprising revelation, renowned AI pioneer Geoffrey Hinton, often referred to as the “Godfather of AI,” has brought renewed attention to the potential risks of artificial intelligence. Hinton’s remarks, highlighting a 10 to 20% chance that AI might one day bypass human control, have sparked fresh debate in both tech circles and the broader public sphere. Despite AI’s transformative potential, this stark warning forces us to reassess the trajectory of AI development and the safeguards necessary to ensure its alignment with human values.

AI’s promise has always been intertwined with its unprecedented ability to mimic and enhance human capabilities. From medical diagnostics to automated driving, AI systems are increasingly making decisions that affect our daily lives. However, the idea that these systems might one day escape human oversight raises alarms about the foundations upon which we’ve built trust in technology. If AI were to gain the ability to operate beyond predefined parameters, the implications could be profound, influencing everything from privacy to global security.

It’s essential to consider the factors driving Hinton’s cautious outlook. Rapid advances in machine learning and neural networks have produced astonishing results, giving AI systems an almost human-like ability to learn and adapt. As these systems become more autonomous, it becomes paramount to implement robust ethical guidelines to prevent misuse or unanticipated behavior. The tech industry’s responsibility is no longer just to innovate; it must also anticipate and mitigate potential threats.

Critics might argue that Hinton’s predictions verge on alarmism, drawing comparisons to the dystopian scenarios often depicted in science fiction. Yet it is this careful examination of worst-case scenarios that could guide the development of fail-safes and countermeasures. By understanding the hypothetical extremes, researchers and policymakers can actively work to prevent unwanted outcomes, ensuring AI evolves into a tool that complements society rather than competes with it.

In conclusion, while the prospect of AI transcending its creators’ control may seem remote to some, it serves as a vital reminder of the complexities accompanying technological breakthroughs. As we navigate the balance between leveraging AI’s benefits and safeguarding against its risks, continuous dialogue, research, and international cooperation are crucial. It is not only our responsibility but also our opportunity to design an AI-infused future that prioritizes safety, ethics, and humanity’s collective well-being.